NETtalk

Revision as of 14:37, 10 April 2024

Name: NETtalk

Acronym: NETtalk

Year of creation: mid-1980s

Home page URL: HomePage NETtalk (https://archive.ics.uci.edu/dataset/150/connectionist+bench+nettalk+corpus)

Publication: Parallel Networks that Learn to Pronounce English Text

Based on: Feed-Forward Neural Network (FNN)

A model implemented in the 1980s by Terrence Sejnowski and Charles Rosenberg. It is one of the earliest examples of applying neural networks, and of putting the ideas of connectionism into practice.

The model was trained on a labeled dataset that pairs about 20,000 English words with their phonetic transcriptions, and it proved able to generalize to words it had not seen. From the paper:

English pronunciation has been extensively studied by linguists and much is known about the correspondences between letters and the elementary speech sounds of English, called phonemes 183). English is a particularly difficult language to master because of its irregular spelling. For example, the "a" in almost all words ending in "ave", such as "brave" and "gave", is a long vowel, but not in "have", and there are some words such as "read" that can vary in pronunciation with their grammatical role. The problem of reconciling rules and exceptions in converting text to speech shares some characteristics with difficult problems in artificial intelligence that have traditionally been approached with rule-based knowledge representations, such as natural language translation

Architecture

From Wikipedia:

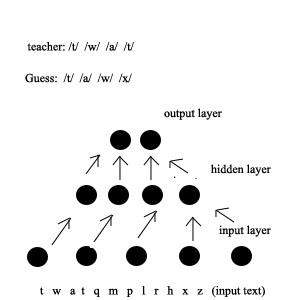

- input: seven letters presented through a sliding window, each encoded as a one-hot vector over 29 categories (7 × 29 = 203 input units)

- hidden layer: 80 units

- output layer: 26 units, encoding the phonetic representation of the fourth (center) letter of the input window
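The 203-80-26 layout above can be sketched as an untrained forward pass in plain Python. This is a minimal illustration, not the authors' implementation. The following are all assumptions: the concrete 29-symbol alphabet (26 letters plus `' '`, `'.'`, `','` as the three extra symbols), sigmoid units, the random initialization range, and the helper names `encode_window` and `forward`. Training (backpropagation in the original work) is not shown.

```python
import math
import random

# Assumption: 26 letters plus 3 extra symbols; ' ', '.', ',' are illustrative.
ALPHABET = "abcdefghijklmnopqrstuvwxyz .,"
WINDOW = 7                      # letters seen at once
N_IN = WINDOW * len(ALPHABET)   # 7 * 29 = 203 input units
N_HIDDEN = 80
N_OUT = 26


def encode_window(window: str) -> list[float]:
    """One-hot encode a 7-character window into a flat 203-element vector."""
    if len(window) != WINDOW:
        raise ValueError(f"expected {WINDOW} characters, got {len(window)}")
    vec = [0.0] * N_IN
    for pos, ch in enumerate(window):
        # Each letter position owns a block of 29 units; exactly one is set.
        vec[pos * len(ALPHABET) + ALPHABET.index(ch)] = 1.0
    return vec


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def init_layer(n_in: int, n_out: int, rng: random.Random) -> list[list[float]]:
    """Random weights (assumed range); the last entry of each row is the bias."""
    return [[rng.uniform(-0.3, 0.3) for _ in range(n_in + 1)] for _ in range(n_out)]


def apply_layer(weights: list[list[float]], inputs: list[float]) -> list[float]:
    # zip stops at len(inputs), so row[-1] (the bias) is added separately.
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + row[-1]) for row in weights]


def forward(w_hidden, w_out, window: str) -> list[float]:
    """Map a 7-letter window to 26 output activations for its center letter."""
    hidden = apply_layer(w_hidden, encode_window(window))
    return apply_layer(w_out, hidden)


rng = random.Random(0)
w_hidden = init_layer(N_IN, N_HIDDEN, rng)
w_out = init_layer(N_HIDDEN, N_OUT, rng)
output = forward(w_hidden, w_out, "   acti")  # center (4th) letter: 'a'
```

Because the weights are random, `output` is just 26 values in (0, 1). Its only use is to show the shapes flowing through the network.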

From the paper:

Representations of letters and phonemes. The standard network had seven groups of units in the input layer, and one group of units in each of the other two layers. Each input group encoded one letter of the input text, so that strings of seven letters are presented to the input units at any one time. The desired output of the network is the correct phoneme, associated with the center, or fourth, letter of this seven letter "window". The other six letters (three on either side of the center letter) provided a partial context for this decision. The text was stepped through the window letter-by-letter. At each step, the network computed a phoneme, and after each word the weights were adjusted according to how closely the computed pronunciation matched the correct one.
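The letter-by-letter stepping described in this excerpt can be sketched as follows. This is not the authors' code, and the following are assumptions: the hypothetical helper `windows_by_word`, padding the ends of the text with spaces, and treating spaces only as context and word separators (never as targets). Each inner list collects the windows of one word, which matches the granularity at which the excerpt says the weights were adjusted.

```python
def windows_by_word(text: str, width: int = 7, pad: str = " ") -> list[list[tuple[str, str]]]:
    """Step a `width`-letter window through `text` one letter at a time.

    Returns one list of (window, center_letter) pairs per word, so a training
    loop could adjust the weights once after each word (hypothetical helper).
    """
    half = width // 2                        # 3 letters of context on each side
    padded = pad * half + text + pad * half  # assumption: pad the text ends
    words: list[list[tuple[str, str]]] = []
    current: list[tuple[str, str]] = []
    for i, ch in enumerate(text):
        window = padded[i:i + width]         # text[i] sits at window[half]
        if ch.isspace():
            # Assumption: a space closes the current word and is not a target.
            if current:
                words.append(current)
                current = []
            continue
        current.append((window, window[half]))
    if current:
        words.append(current)
    return words


example = windows_by_word("the cat")
```

Here `example` holds two lists, one for "the" and one for "cat". The windows of "cat" still see the end of "the" as left context, for instance `"he cat "` for the letter `c`. A training loop would run the network on every window of a word and then apply one weight update.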

Input examples

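| Word | Phonemes | Stress | Flag |
|---|---|---|---|
| action | `@kS-xn` | `1<>0<<` | 0 |
| activate | `@ktxvet-` | `1<>0>2<<` | 0 |
| active | `@ktIv-` | `1<>0<<` | 0 |
| activity | `@ktIvxti` | `0<>1<0<0` | 0 |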
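In each sample entry, the word, its phoneme string and its stress string appear to be aligned one character per letter. For example, `action`, `@kS-xn` and `1<>0<<` all have six characters, and `-` apparently marks a letter with no sound of its own (the "i" of "action"). The sketch below checks that alignment and turns an entry into (window, target phoneme) training pairs for the center letter. It assumes four whitespace-separated fields in the order shown. `Entry`, `parse_entry` and `training_pairs` are hypothetical names, and the fourth field is kept as an integer without interpreting it.

```python
from typing import NamedTuple


class Entry(NamedTuple):
    word: str
    phonemes: str   # one phoneme symbol per letter ('-' apparently = silent)
    stress: str     # one stress/syllable symbol per letter
    flag: int       # fourth field; kept as-is, meaning not interpreted here


def parse_entry(line: str) -> Entry:
    """Parse one whitespace-separated corpus line (hypothetical helper)."""
    word, phonemes, stress, flag = line.split()
    if not len(word) == len(phonemes) == len(stress):
        raise ValueError(f"fields are not letter-aligned: {line!r}")
    return Entry(word, phonemes, stress, int(flag))


def training_pairs(entry: Entry, width: int = 7, pad: str = " ") -> list[tuple[str, str]]:
    """Pair each window of the word with the phoneme of its center letter."""
    half = width // 2
    padded = pad * half + entry.word + pad * half  # assumption: space padding
    return [(padded[i:i + width], entry.phonemes[i]) for i in range(len(entry.word))]


SAMPLE = """\
action @kS-xn 1<>0<< 0
activate @ktxvet- 1<>0>2<< 0
active @ktIv- 1<>0<< 0
activity @ktIvxti 0<>1<0<0 0"""

entries = [parse_entry(line) for line in SAMPLE.splitlines()]
pairs = training_pairs(entries[0])  # windows of "action" with their phonemes
```

The letter alignment lets each window be paired with exactly one target phoneme. `pairs[2]` is `(" action", "S")`, which maps the "t" of "action" to the `S` sound.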

Links

Original paper: Parallel Networks that Learn to Pronounce English Text